Running a site in the cloud and paying for CPU time with pennies and fractions of pennies is a fascinating way to profile your app. I mean, we all should be profiling our apps, right? Really paying attention to what the CPU does, how many database connections are made, and what memory usage is like. But we don't. If that code doesn't affect your pocketbook directly, you're less likely to bother.

Interestingly, I find myself performing optimizations driving my hybrid car or dealing with my smartphone's limited data plan. When resources are truly scarce and (most importantly) money is on the line, one finds ways - both clever and obvious - to optimize and cut costs.

Sloppy Code in the Cloud Costs Money

I have an MSDN Subscription which includes quite a bit of free Azure Cloud time. Make sure you've turned this on if you have MSDN yourself. This subscription is under my personal account and I pay if it goes over (I don't get a free pass just because I work for the cloud group) so I want to pay as little as possible if I can.

One of the classic and obvious rules of scaling a system is "do less, and you can do more of it." When you apply this idea to the money you're paying to host your system in the cloud, you want to do as little as possible to stretch your dollar. You pay pennies for CPU time, pennies for bandwidth and pennies for database access, but it adds up. If you're opening database connections in a loop or transferring more data than is necessary, you'll pay.

I recently worked with designer Jin Yang and redesigned this blog, made a new home page, and the Hanselminutes Podcast site. In the process we had the idea for a more personal archives page that makes the eponymous show less about me and visually more about the guests. I'm in the process of going through 360+ shows and getting pictures of the guests for each one.

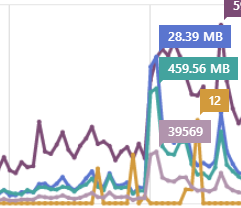

I launched the site and I think it looks great. However, I noticed immediately that the Data Out was REALLY high compared to the old site. I host the MP3s elsewhere, but the images put out almost 500 megs in just hours after the new site was launched.

You can guess from the figure when I launched the new site.

I *rarely* have to pay for extra bandwidth, but this wasn't looking good. One of the nice things about Azure is that you can view your bill any day, not just at the end of the month. I could see that I was inching up on the outgoing bandwidth. At this rate, I was going to have to pay extra at the end of the month.

I thought about it, then realized, duh, I'm loading 360+ images every time someone hits the archives page. It's obvious, of course, in retrospect. But remember that I moved my site into the cloud for two reasons.

- Save money

- Scale quickly when I need to
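To put rough numbers on why 360+ images per page view works against the first goal, here is a back-of-the-envelope sketch. The average image size, the number of page views, and the number of images a non-scrolling visitor sees are all illustrative assumptions, not measurements from this site.

```javascript
// Rough estimate of outgoing image bandwidth for an archives page.
// Every input value used below is an illustrative assumption.
function estimateImageBytes(imageCount, avgImageBytes, pageViews) {
  return imageCount * avgImageBytes * pageViews;
}

function toMegabytes(bytes) {
  return bytes / (1024 * 1024);
}

// Assumed: 360 images of about 20 KB each, 100 archive page views.
const eagerBytes = estimateImageBytes(360, 20 * 1024, 100);

// Assumed: a visitor who never scrolls only needs about 12 images.
const lazyBytes = estimateImageBytes(12, 20 * 1024, 100);

console.log(toMegabytes(eagerBytes).toFixed(1) + " MB if every image loads");
console.log(toMegabytes(lazyBytes).toFixed(1) + " MB if only visible images load");
```

Swap in your own image sizes and traffic to see how quickly the eager case grows.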

ASIDE: It's on my list to add "topic tagging" and client-side sorting and filtering for the shows on Hanselminutes. I think this will be a nice addition to the back catalog. I'm also looking at better ways to utilize the growing transcript library. Any thoughts on that?

The easy solution was to lazy load the images as the user scrolls, thereby only using bandwidth for the images you see. I looked at Mika Tuupola's jQuery LazyLoad plugin as well as a number of other similar scripts. There's also Luis Almeida's lightweight Unveil if you want fewer bells and whistles. I ended up using the standard Lazy Load.

Implementation was trivial. Add the script:

<script src="//ajax.aspnetcdn.com/ajax/jQuery/jquery-1.7.2.min.js" type="text/javascript"></script>
<script src="/jquery.lazyload.min.js" type="text/javascript"></script>

Then, give the lazy load plugin a selector. You can say "just images in this div" or "just images with this class", however you like. I chose to do this:

<script>
$(function() {
    $("img.lazy").lazyload({effect: "fadeIn"});
});
</script>

The most important part is that the img element that you generate should include a TINY image for the src. That src= will always be loaded, no matter what, since that's what browsers do. Don't believe any lazy loading solution that says otherwise. I use the 1px gray image from the GitHub repo. Also, if you can, set the final height and width of the image you're loading to ease layout.

<a href="/363/html5-javascript-chrome-and-the-web-platform-with-paul-irish" class="showCard">

<img data-original="/images/shows/363.jpg" class="lazy" src="/images/grey.gif" width="212" height="212" alt="HTML5, JavaScript, Chrome and the Web Platform with Paul Irish" />

<span class="shownumber">363</span>

<div class="overlay">HTML5, JavaScript, Chrome and the Web Platform with Paul Irish</div>

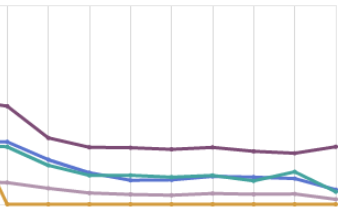

</a>The image you're going to ultimately load is in the data-original attribute. It will be loaded when the area when the image is supposed to be enters the current viewport. You can set the threshold and cause the images to load a littler earlier if you prefer, like perhaps 200 pixels before it's visible.$("img.lazy").lazyload({ threshold : 200 });After I added this change, I let it run for a day and it chilled out my server completely. There isn't that intense burst of 300+ requests for images and bandwidth is way down.

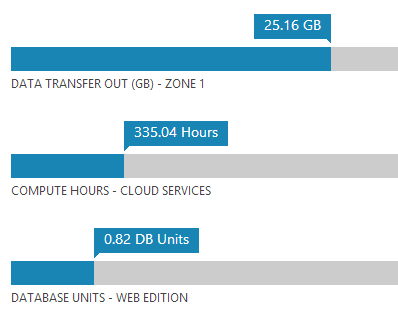
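If you're curious what a threshold actually changes, here is a minimal sketch of the kind of viewport check a lazy loader performs. This is a simplified, vertical-only model written for illustration; it is not the plugin's source, and the function name `shouldLoad` and the sample pixel values are my own assumptions.

```javascript
// Simplified model of threshold-based lazy loading (vertical axis only).
// Not the Lazy Load plugin's actual code; names and numbers are hypothetical.
function shouldLoad(imageTop, imageHeight, scrollTop, viewportHeight, threshold) {
  // Grow the viewport by `threshold` pixels above and below.
  const viewTop = scrollTop - threshold;
  const viewBottom = scrollTop + viewportHeight + threshold;
  const imageBottom = imageTop + imageHeight;
  // Load when any part of the image overlaps the grown viewport.
  return imageBottom >= viewTop && imageTop <= viewBottom;
}

// Assumed layout: one column of 212px-tall images stacked with no gaps.
const imageTops = [0, 212, 424, 636, 848, 1060, 1272];

// Assumed: page scrolled to the top, 800px-tall viewport.
const withoutThreshold = imageTops.filter((top) => shouldLoad(top, 212, 0, 800, 0));
const withThreshold = imageTops.filter((top) => shouldLoad(top, 212, 0, 800, 200));

console.log(withoutThreshold.length + " images load with no threshold");
console.log(withThreshold.length + " images load with a 200px threshold");
```

A bigger threshold trades a little extra bandwidth for images that are already there by the time the user scrolls to them.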

10 Websites in 1 VM vs 10 Websites in Shared Mode

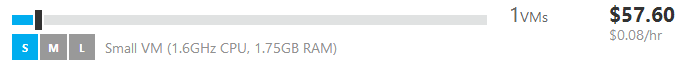

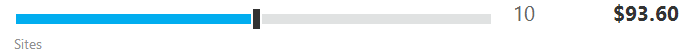

I'm running 10 websites on Azure, currently. One of the things that isn't obvious (unless you read) in the pricing calculator is that when you switch to a Reserved Instance on one of your Azure Websites, all of your other shared sites within that datacenter will jump into that reserved VM. You can actually run up to 100 (!) websites in that VM instance and this ends up saving you money.

Aside: It's nice also that these websites are 'in' that one VM, but I don't need to manage or ever think about that VM. Azure Websites sits on top of the VM and it's automatically updated and managed for me. I deploy to some of these websites with Git, some with Web Deploy, and some I update via FTP. I can also scale the VM dynamically and all the Websites get the benefit.

Think about it this way, running 1 Small VM on Azure with up to 100 websites (again, I have 10 today)...

...is cheaper than running those same 10 websites in Shared Mode.

This pricing is as of March 30, 2013 for those of you in the future.
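The comparison above comes down to simple arithmetic, sketched below. The prices in the example are hypothetical placeholders in whole cents, not Azure's actual rates; plug in the numbers from the pricing calculator for your own region and date.

```javascript
// Compare N sites in Shared mode against one Reserved VM that hosts them all.
// Prices are whole cents per month and are hypothetical placeholders.
function monthlyCostShared(siteCount, sharedCentsPerSite) {
  return siteCount * sharedCentsPerSite;
}

function breakEvenSiteCount(sharedCentsPerSite, reservedVmCents) {
  // Smallest whole number of sites at which Shared mode costs more than the VM.
  return Math.floor(reservedVmCents / sharedCentsPerSite) + 1;
}

// Hypothetical prices: 1000 cents per shared site, 6000 cents for the VM.
const sharedCents = 1000;
const vmCents = 6000;

console.log("10 shared sites cost " + monthlyCostShared(10, sharedCents) + " cents");
console.log("The VM costs " + vmCents + " cents regardless of site count");
console.log("The VM wins from " + breakEvenSiteCount(sharedCents, vmCents) + " sites up");
```

Past the break-even point every additional site on the VM is effectively free until you run out of capacity.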

The point here being, you can squeeze a LOT out of a small VM, and I plan to keep squeezing, caching, and optimizing as much as I can so I can pay as little as possible in the Cloud. If I can get this VM to do less, then I will be able to run more sites on it and save money.

The result of all this is that I'm starting to code differently now that I can see the pennies add up based on specific changes.

© 2013 Scott Hanselman. All rights reserved.
