It's from a log time now that I wanted to share the following stuff, but

running time was always against me.. ;-)

I'd say that during last

years there were plenty incredible events around MySQL Performance. And

one of them is a story about Read-Only performance in MySQL 5.6 -- it's

quite fun to tell it now, but, believe me, it's even more fun to live it

yourself ;-)) Every important milestone around performance improvement

in MySQL was similar to resolve a Detective Story ;-) Probably one day

somebody will take a time to write a book about ;-)) But for my part,

I'm just sharing what I've found interesting and important..

First

of all: why even care about Read-Only performance in MySQL/InnoDB ?.. -

Well, except if your database is "write-only", but usually data reads

are representing a significant part in OLTP activity. And if your

database engine is transactional, the reads from such a database

are not "simple reads" -- they will also be a part of transactions,

hitting different level constrains to match committed-only data or

accept "dirty reads", etc. etc. etc. -- just to say that it's not as

simple as it looks like ;-)) And on the same time read-only or

read-dominated performance represents very significantly an overall

database engine design, as it'll fire the most critical internal

bottlenecks and give us an idea about its true scalability limits..

NOTE:

I'm not covering here IO-bound workloads.. - it'll be already quite a

long article, so I'm trying to make it shorter ;-)

(then, as you

know, since you become IO-bound, your database performance is driven by

your storage performance since then.. - and any DBA will simply advise

you to buy a faster storage or more RAM ;-)) -- so, for the moment I'll

worry about such a guy who has enough of RAM and still hitting

performance/scalability issues ;-))

Split of the kernel_mutex

So

far, it's more than a year ago there was shipped the very first working

MySQL 5.6 version. It was in April 2011, MySQL 5.6.2 came with one of

fundamental changes -- kernel_mutex contention was finally removed

within InnoDB!.. This contention was seen as the main scalability

stopper on many kind of workloads (including read-only). And Sunny made

an incredible work to split kernel_mutex into several mutexes within

InnoDB! The result of this work looked very promising. However, there is

always a risk that by resolving one contention, you're also making

another one more significant (which was simply hidden for the being time

by the first one, which was more important until now).. - And it's

exactly what happened in MySQL 5.6.2: instead of kernel_mutex contention

we moved now to the TRX mutex contention (trx_sys), and in some

particular cases performance became even worse than before.. You may

find more details about in my MySQL

5.6 notes articles.

But well, the only reason for problems to

exist is to be fixed, isn't it?.. ;-))

Introducing of

READ-ONLY transactions

TRX mutex is mainly protecting data

related to transactions within InnoDB code. While, when a part of your

database activity is read-only, the question is coming in mind: is it

possible to simplify little bit all this transactional overhead for a

read-only operations?.. - And Sunny again came with a brilliant idea to

introduce a new type of transactions within InnoDB - READ-ONLY

TRANSACTIONS! - I think that his new feature was little bit missed

within all other new improvements, while it was the first true and

visible progress in MySQL 5.6 performance improvement! ;-))

How

it works?..

if you're using auto-commit turned ON, there is even nothing to do,

InnoDB will do all the stuff "automagically" for you! ;-)) -- if

you're not using BEGIN/END/FOR UPDATE in your SELECT queries, they

will be automatically turned into READ-ONLY transactions and most of

transactional overhead will be avoided during your SELECT execution!

otherwise you may explicitly START TRANSACTION READ ONLY and get the

same performance benefit for your SELECT queries!

For more details, please read the Sunny's

article explaining all the related stuff and presenting impressive

benchmark results on Sysbench!

By the end of 2011 year we've

shipped MySQL 5.6.4 including all these new features, and MySQL 5.6

started to look better comparing to 5.5 even on my dbSTRESS

tests ;-))

However, "better" is not enough.. What we're

expecting from MySQL 5.6 is to be "way better" than any previous release

;-))

What's next?..

Then we entered into the most

painful period, when you're asking yourself several times per day "do you

really see what you see?.." and "do you even believe to what you see?.."

To

get it by order:

since introduction of READ-ONLY transactions, near no other/new

contention was reported by InnoDB during read-only workloads..

however, we were still unable to significantly out-pass 16 concurrent

users sessions performance on servers with more than 16cores..

it was clear there is still a contention somewhere in the MySQL or

InnoDB code..

and on this step we were really happy to use PERFORMANCE SCHEMA (PFS)

as it's the only way to trace a time spent on every levels of MySQL

code (particularly on mutexes and RW-locks in the given case)..

monitoring with PFS clearly indicated that the most of time is spent

not on the LOCK_open mutex and MDL related locks!

by chance, Mikael already started to work with Dmitry Lenev to remove

LOCK_open contention, but the final solution was not ready yet on that

time..

to get an idea about potential gain in MySQL 5.6 performance once the

LOCK_open mutex contention will be fixed, Dmitry made a "dirty patch"

removing completely the use of LOCK_open and/or all MDL related stuff

(just for testing propose only)..

And?.... ;-)

As you may imagine, I've expected to see performance way higher once both

patches were applied... - but instead I've observed the following on a

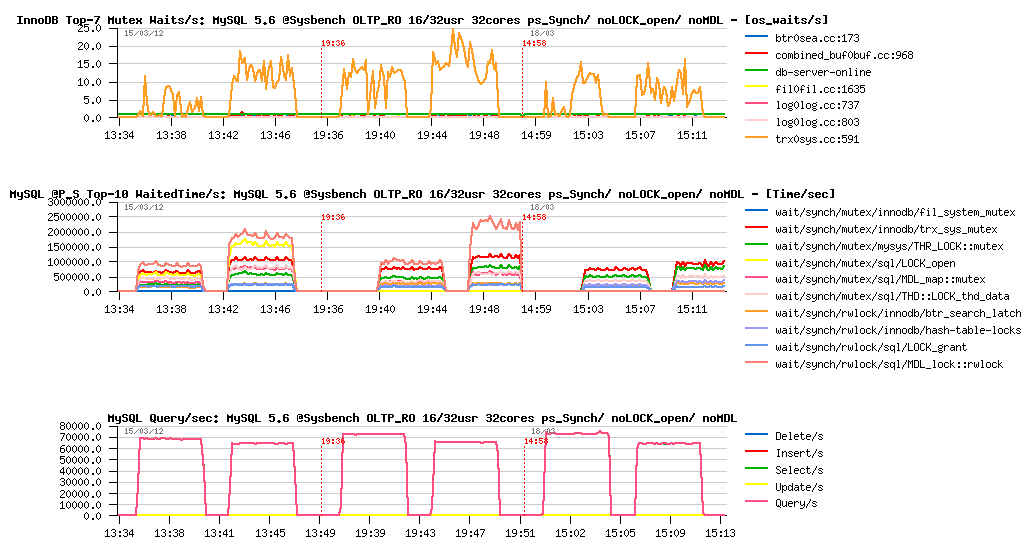

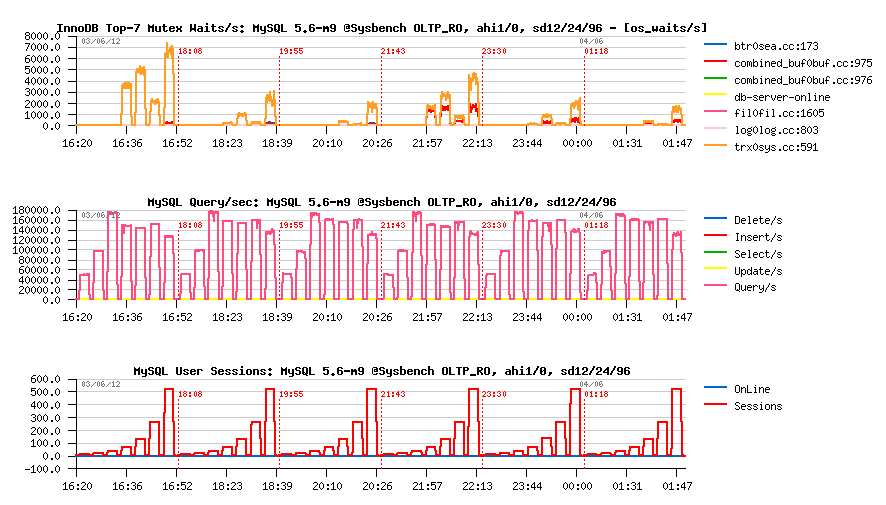

simple Sysbench OLTP_RO test:

Test case :

32cores server

Sysbench OLTP_RO workload

each test series is executed with 16 and then with 32 concurrent users

sessions (threads)

test series:

#1 -> MySQL 5.6 original

#2 -> #1 + patch removing any use of LOCK_open mutex

#3 -> #2 + patch removing any use of MDL related locks

Results :

Observations

:

as you can see, within all test series QPS level is exactly the same!..

from the InnoDB mutex waits you may see that within all test series

the main wait is on trx_sys mutex (but looking on the numbers you'll

also see that it's a very low wait ;-)

however, according PFS, time spent on trx_sys mutex is quite

important..

and then from PFS about test series:

#1 - both LOCK_open and MDL_lock times are present and they are

top waited times

#2 - LOCK_open time is disappeared, while MDL_lock is still

present (as expected)

#3 - both LOCK_open and MDL_lock times are gone.. - but

performance still remained the same?.. - how it's possible?..

I'd admit that during this time I've even traced PFS code to see if what

it's reporting is really matching the reality ;-))

All these and

other problems we placed into agenda of our MySQL Performance meeting,

which this year took place in Paris.. And this meeting was a full success.

After one week of brainstorming and common efforts, we finally found the

root problem of all observed bottlenecks.. ;-))

G5 patch

This

is one of the cases when a database development is meeting HPC level

problems ;-)) I've already dedicated a full

article for the story of this remarkable improvement, will just note

here that bottleneck was related to CPU cache line misses, and once fixed

- performance was improved at least by 50% even on the less sensible HW,

while on some workloads we even reached x6 times(!) better

performance ;-))

The codename "G5" was assigned to this performance

patch to reference the list of all improvement applied to MySQL 5.6

related to CPU caches. And since this critical point, our life finally

moved to a normal way -- we moved back to true contentions as reported by

InnoDB and Performance Schema ;-))

What's next?..

Of

course, the most important improvement now was expected from the LOCK_open

and MDL patch. However, once again, for our big surprises, removing any

use of LOCK_open & MDL stuff did not bring any changes... Why?.. - a more

detailed analyze with PFS showed that the most bigger amount of time was

now again spent within TRX mutex contention! And it became quite clear,

that until TRX mutex is not fixed, there will be no any benefit at all

from LOCK_open and MDL fixes..

InnoDB spin wait delay setting is

coming in the game

I'd say it was little bit frustrating to

observe so high TRX contention even after so impressive speed-ups in

already achieved performance levels.. But well, Sunny again, by analyzing

code around mutex contention came with conclusion that increasing of wait

delays during mutex spins will help to lower observed contentions! -

Interesting that innodb_spin_wait_delay setting variable existed

from a long date within InnoDB, but I never saw it helping to reduce any

mutex contention before ;-) But now, since we came back to pure code

contentions, this setting started to shine again!

I've spent an

amount of time to analyze it, and to demonstrate the impact of this

setting in MySQL 5.6 let me present you a short test result:

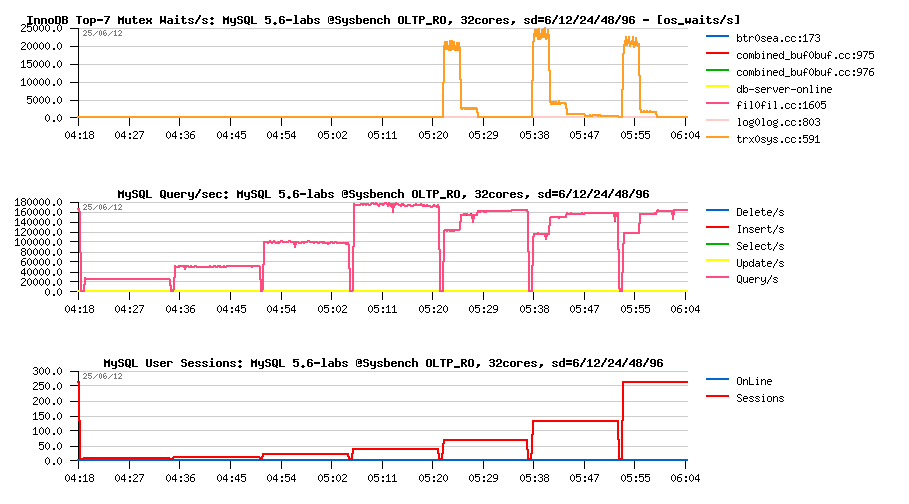

Test

case:

Sysbench OLTP_RO workload

concurrent user sessions: 4, 8, 16, 32, 64, 128, 256

each load level is starting with innodb_spin_wait_delay=6

then, 3 minutes later, innodb_spin_wait_delay is set dynamically to 12

then to 24, 48 and 96

Observations :

first of all let's note a near linear scalability on the results up to

32 concurrent users! (I still remember the time when on 32cores

performance was only worse, and on 32 users the result was always

worse than on 16 ;-)) and the true changes came with MySQL 5.5, and

then it's only continuing ;-))

then, since 64 users, the impact of innodb_spin_wait_delay (sd) become

very visible:

with sd=6 we're blocked on 120K QPS

with sd=12 we're reaching 150-160K QPS

and finally with sd=96 just slightly more than 160K QPS

however, there is a huge gap between 120K and 160K QPS ;-))

and as you can see from the upper graph -- the regression is due

increased contention on the TRX mutex with a growing workload..

NOTE: the default value of innodb_spin_wait_delay in MySQL 5.5 is 6, so

it'll be changes since MySQL 5.6, and for sure it'll be bigger than 6 ;-))

But

what exactly the meaning of this setting within InnoDB?..

when InnoDB is needing to acquire a mutex, the code will spin little

bit if this mutex cannot be acquired at once (spin - means do several

loops with short sleeps and try to acquire it again)

the "innodb_sync_spin_loops" setting is telling how many such loops

should be involved (default: 30)

and the "innodb_spin_wait_delay" setting is simply defining how wide

the range of the sleep interval should be used to choose (randomly) a

value for sleeping (so, setting innodb_spin_wait_delay=96 doesn't mean

that InnoDB will sleep 96us, but just a randomly chosen value between

0 and 96 microseconds)

This reminds me the discussion with Vadim last year about his observations

on the innodb_sync_spin_loops impact while hitting kernel_mutex

contention. And I also think that these spin related settings should be

auto-adaptable and used per mutex rather globally. As well, according my

observations, the spin delay setting is having not the same impact on

mutexes as on RW-locks. But well, each thing on its time.. ;-))

LOCK_open

contention removed

And only then, after all this improved

stuff, we finally were able finally to see the benefit of the latest

improvement in MySQL server code and made by Mikael and Dmitry Lenev --

split of LOCK_open mutex contention!.. This gave us yet another 10-15%

performance improvement!

And then we're hitting the MDL locks

contention.. well, as expected ;-))

So, as you see, MySQL 5.6 is

coming with huge improvements in performance! However for the moment the

"innodb_spin_wait_delay" is playing a significant role for InnoDB

performance tuning.

Let's go little bit further now, and see what

happens now to other stuff like AHI, and where we're now with an overall

scalability limits..

Adaptive Hashing Index (AHI) and Spin Wait

Delay impact

Keeping in mind we're hitting real contentions

since now, the question which is coming back again is: use or not to use

Adaptive Hash Index (AHI) ?.. - in many workloads its contention on the btr_search_latch

is coming on the top position, however I'm not really sure that waits

reported on it by InnoDB are the true waits.. Also, some are claiming to

ss a better performance when AHI is turned off. Well, let's see the impact

of AHI and its relation with spin wait delay (sd) on the following tests:

Test

scenario:

workloads: dbSTRESS RO, Sysbench RO S-ranges, Sysbench OLTP_RO

server: 32cores Intel, 128GB RAM

concurrent users: 8, 16, 32, .. 512

configs:

ahi=on, sd=12 (spin delay)

ahi=on, sd=24

ahi=on, sd=96

ahi=off, sd=12

ahi=off, sd=24

ahi=off, sd=96

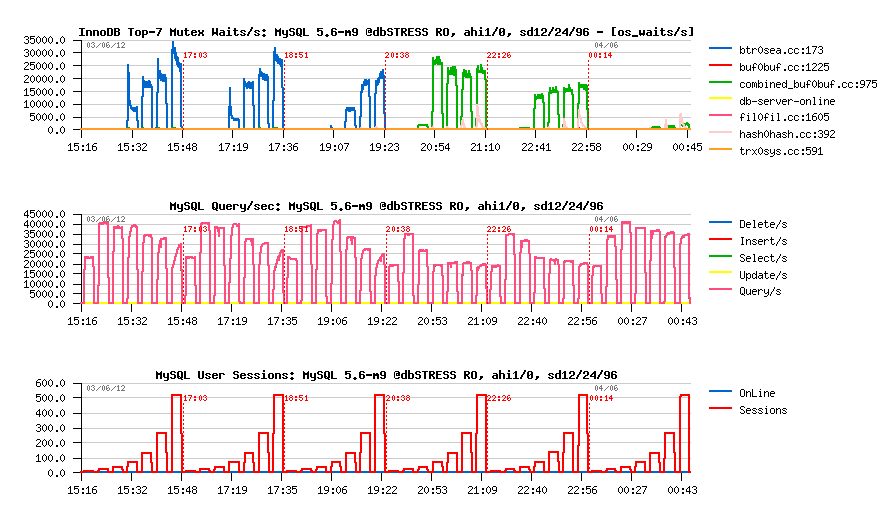

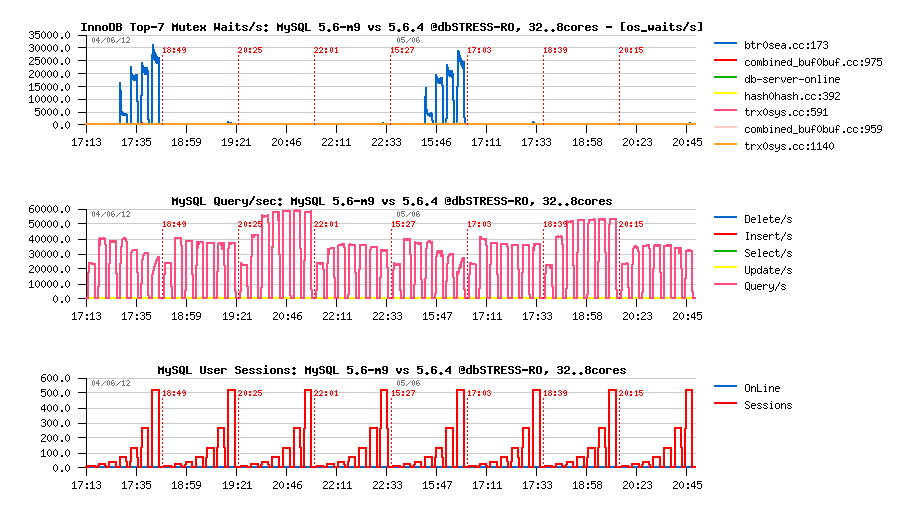

dbSTRESS RO:

Observations

:

with AHI=On the main contention is on the btr_search_latch RW-lock,

and setting spin delay=12 or 24 is looking like the most optimal

with AHI=Off the main contention is moving to the Buffer Pool mutexes,

and setting spin delay=96 is the most appropriate..

NOTE: interesting that with AHI disabled performance is still not

better..

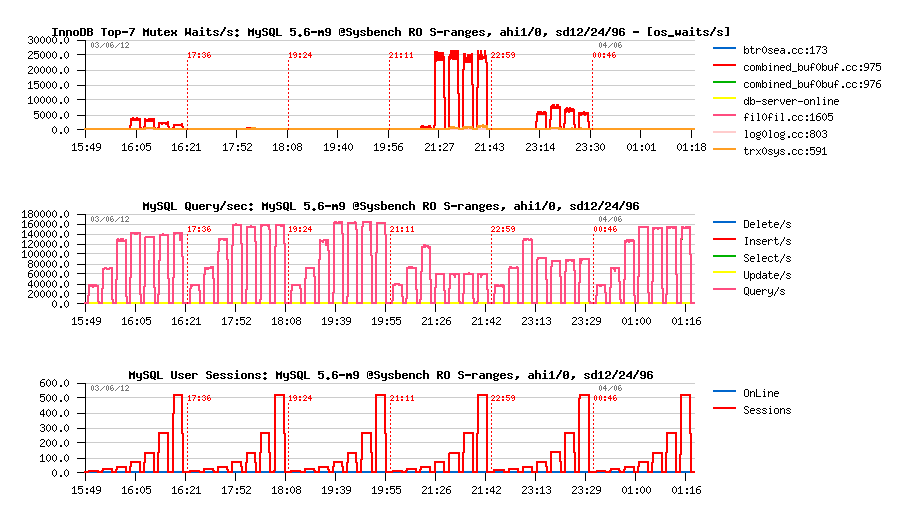

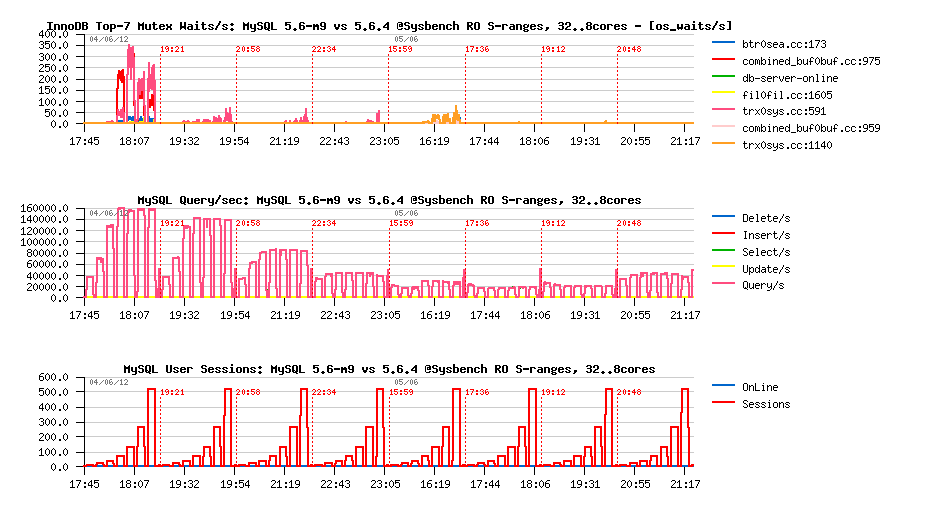

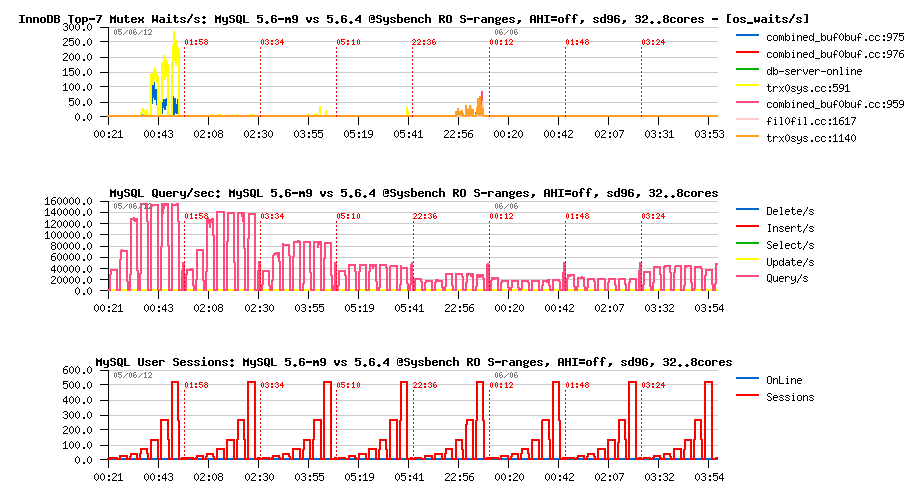

Sysbench RO S-ranges:

Observations

:

setting spin delay=96 giving the best results for both AHI=On and

AHI=Off

interesting that combination of AHI=On + spin delay=96 is giving the

best result here

also, independently to AHI setting (On/Off), the main contention on

this workload is remaining on the Buffer Pool mutex..

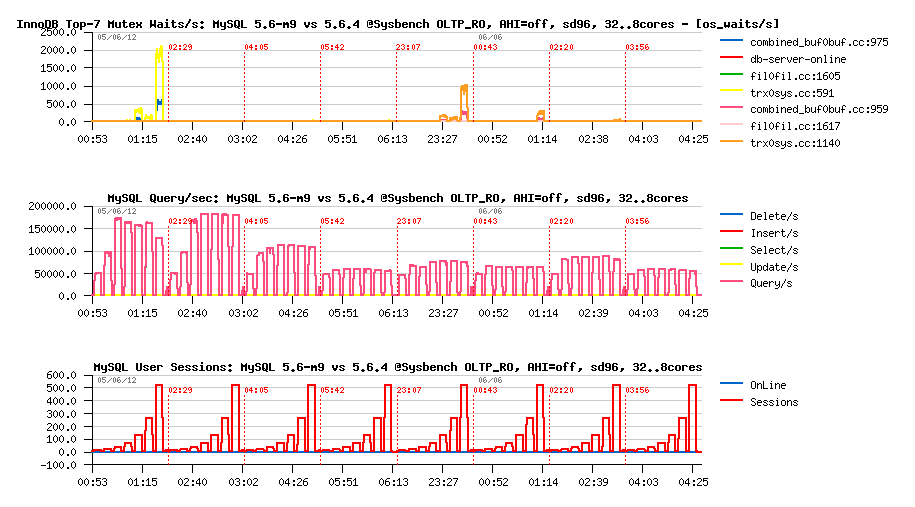

Sysbench OLTP_RO:

Observations

:

setting spin delay to 24 or 96 is giving the best overall result with

both AHI=On and AHI=Off

TRX sys mutex seems to be the main contention on this workload..

Analyzing Read-Only Scalability on 32cores Server

So, from

the previous results, seems to me that for the scalability tests I have to

use spin wait delay set to 24 when AHI=On, and 96 when AHI=Off. Let's get

a look on results with AHI=On first.

Test scenario:

MySQL 5.6-m9 vs 5.6.4

setting: AHI=On, spin wait delay=24

workloads: dbSTRESS-RO, Sysbench RO S-ranges, Sysbench OLTP_RO

concurrent users: 8, 16, 32 .. 512

same 32cores server, but each test case is repeated with MySQL server

bound via "taskset" on:

#1 -- all 32cores bi-thread (no binding, all 4 sockets are used)

#2 -- 32cores single-thread (binding on 4 sockets, one thread from

each core)

#3 -- 16cores single-thread (binding on 2 sockets, one thread from

each core)

#4 -- 8cores single-thread (binding on 1 socket, one thread from

each core)

So, to keep it compact, on the following graphs you'll see 8 test series:

first 4 parts of graphs are corresponding to MySQL 5.6-m9 results

within #1, #2, #3 and #4 config

then the same test series, but for MySQL 5.6.4..

dbSTRESS-RO:

Observations

:

first surprise is to see the contention on "btr_search_latch"

completely disappearing from InnoDB reporting once only one thread per

core is used..

then, it's sure, there is still something going wrong, as on 16cores

we have a better results vs 32cores.. - I'm surprised myself how often

over a time I'm hitting various MySQL limits while testing dbSTRESS

workload ;-))

at least the positive thing is that MySQL 5.6-m9 is out-passing 5.6.4

;-)

well, dbSTRESS-RO should be yet more investigated..

Sysbench RO S-Ranges:

Observations

Observations :

these graphs are at least looking like I've expected ;-)

the best result on 5.6-m9 is when all 32cores with all core threads

are used

having a second thread on CPU core is helping in 5.6-m9

scalability is nor perfect on 5.6-m9, but performance is constantly

growing with more cores available ;-)

while on 5.6.4 the best result is reached while only one CPU socket is

used (hitting the cache line issue fixed within April's 5.6-labs)..

up to x6 times performance improvement in 5.6-m9, which is really good

;-)

Sysbench OLTP_RO:

Observations

:

5.6-m9 performance on 32cores is better than on 32cores bi-thread,

seems that TRX sys mutex contention is increasing in this case..

otherwise, pretty good scalability on 5.6-m9 while moving from 8 to 16

and 32cores

while 5.6.4 is getting a better performance on 16 vs 8cores, but

loosing it on 32cores again (hitting here CPU cache line issue + other

internal contentions)..

up to x3 times better performance on 5.6-m9 vs 5.6.4 ;-))

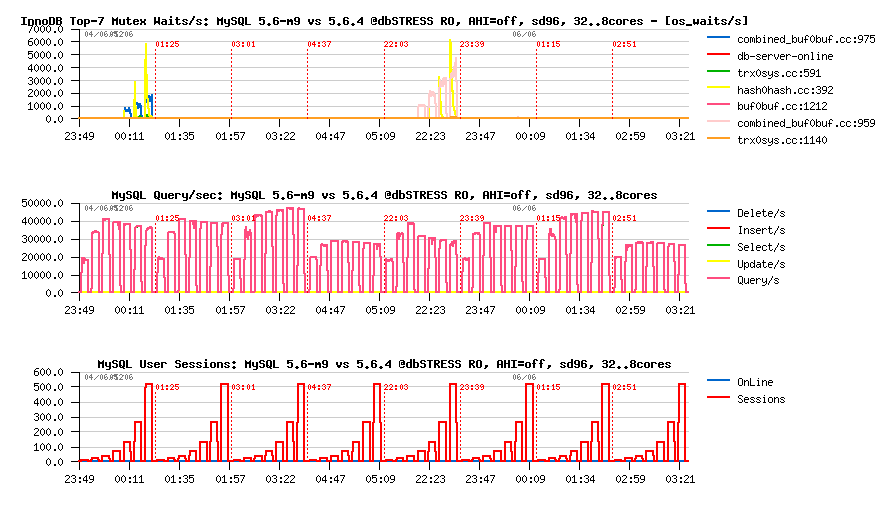

The same tests now, but with AHI=Off and spin delay=96

NOTE:

Interesting that results with disabled AHI are quite similar to those with

AHI=On, but performance is still better when AHI is turned ON. And, yes,

it's clear I'm hitting another "hidden bottleneck" within MySQL 5.6 on

dbSTRESS workload..

dbSTRESS-RO, AHI=Off, sd=96:

Sysbench

RO S-ranges, AHI=Off, sd=96:

Sysbench

OLTP_RO, AHI=Off, sd=96:

What

else to add?..

Just express my personal kudos to Sunny, Mikael and

Dmitry! and the whole MySQL Team! ;-))

Then, work is continuing,

and new challenges are already waiting for us.. ;-))

As usually,

comments are welcome!

Rgds,

-Dimitri

PlanetMySQL Voting:

Vote UP /

Vote DOWN

DIGITAL JUICE

No comments:

Post a Comment

Thank's!