The Enkitec Extreme Exadata Expo (E4) event is over, but I still have plenty to say about the technology. The event was a great success, with plenty of interesting speakers and presentations. As I said in a previous note, I was particularly keen to hear Frits Hoogland’s comments on Exadata and OLTP, Richard Foote on Indexes, and Maria Colgan’s comments on how Oracle is making changes to the optimizer to understand Exadata a little better.

All three presentations were interesting – but Maria’s was possiby the most important (and entertaining). In particular she told us about two patches for 11.2.0.3, one current and one that is yet to be released (unfortunately I forgot to take note of the patch numbers).

The patch that is currently available introduces a new option for dbms_stats.gather_system_stats(). Pass in the parameter value ‘Exadata’ and (along with any other steps) the code will set the MBRC stored statistic to 128. If this looks like a familiar idea, check this comment on an earlier post:

It’s slightly worrying that when you set up a (datawarehouse) system on Exadata it might be a good idea – at least in the short term – to set thedb_file_multiblock_read_count to 128 because you really do want Oracle to think that tablescans are, in general, a pretty good fast option – we’ve only just got ver persuading people that you shouldn’t set this parameter in any version of 10g or above

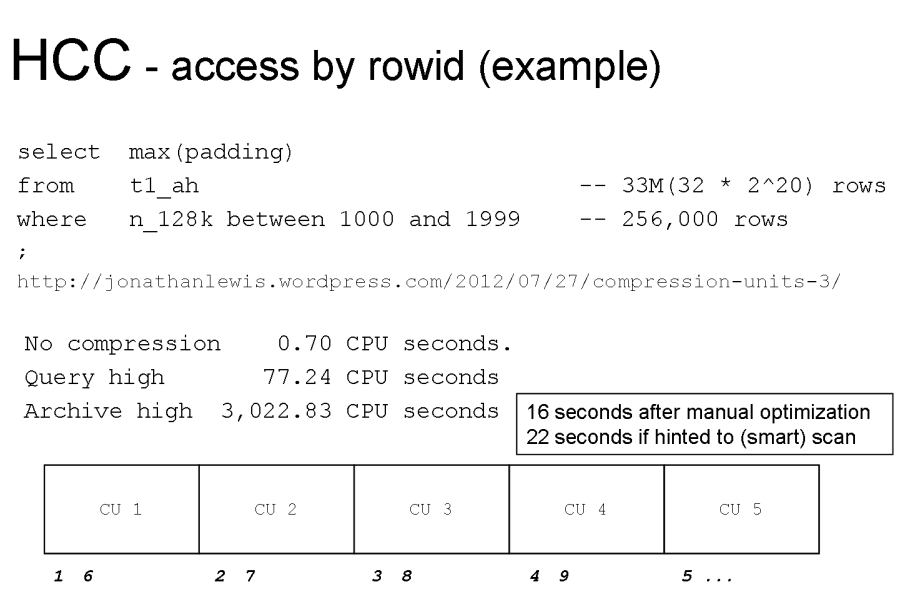

It’s nice to know that with the right patch we now have a proper way to get the effect we need. (I can’t help thinking, though, that since a scan in Exadata is effectively “Parallel N” when N is the number of cell servers, it might be even better if the fix changed the MBRC to allow for a significant percentage of N.)The patch that is not yet available addresses the problem I described in the same post, that a sample query took 0.7 seconds to complete on an uncompressed table, 77 seconds if the table was compressed at “query high”, and 3,000 seconds when compressed at “archive high.” Here’s the slide I presented at the conference, where I explained why compression made the queries run so slowly, and how I had reduced the run-time from 3,000 seconds to 16 seconds by optimising the query:

As you can see, the query is just “select from single table by index range scan”. The bottom half of the slide gives you an indication of why the performance is so bad – the numbers give an indication of how the data is scattered through the table, and represent the order in which the rows are fetched as the query walks the index; and every “block” in the picture is a separate compression unit, which has to be de-compressed each time it is visited. Because of the data pattern, and because Exadata will only keep one decompressed unit in the PGA at any moment, my query decompresses one compression unit for every single row fetched – and that’s a lot of CPU disappearing from the compute node of an Exadata system.

So how can you rewrite the query to optimise it ? Here’s how – and it’s a method that can have an effect whether or not you’re using Exadata, but it’s only with HCC that you’re really likely to see a dramatic difference in CPU usage (in more traditional systems it can have quite an impact on physical I/O):

select

/*+

leading(v1 t1_ah)

use_nl(t1_ah)

monitor

*/

max(t1_ah.padding)

from

(

select /*+ index(t1_ah) no_merge no_eliminate_oby */

rowid r1

from

t1_ah

where

n_128k between 1000 and 1999

order by

r1

) v1,

t1_ah

where

t1_ah.rowid = v1.r1

;

It’s my old “two-step” approach to visiting tables and indexes. Get the rowids you really need, and visit the table later. In this case, though, I’ve sorted the rowids before I visit the data. The effect of this sort is that I visit each table block (or, in the case of Exadata, each Compression Unit) just once, and pin it (or, in Exadata, keep the decompressed unit in the PGA) until I’ve extracted all the rows from it that I need.

So, you might ask, how will the patch optimise for indexed access in Exadata ? The optimizer will be allowed to introduce a new operation between an “index range scan” and a “table access by rowid” – and the operation will be called (something like) “sort by datablock address”. (And if you think I’m feeling smug about pre-empting the optimizer group, you’d be right – but then I don’t have to change and test the source code, of course, so I had a timing advantage.)

Footnote: When I saw the “index pre-fetching” change to the nested loop join in Oracle 9i (and that’s long before the NLJ_batching feature of 11g), I had assumed that this sorting step was part of the strategy. So the Exadata strategy looks like something I’ve been waiting for for the last 10 years or so.

DIGITAL JUICE

No comments:

Post a Comment

Thank's!